What’s Jagged Intelligence, Really?

Why Your Super-Smart Chatbot Thinks 9.11 > 9.9

Imagine you hire someone for a job. In the interview, they solve a ridiculously hard puzzle that stumps everyone else. You’re impressed, and thrilled to have hired someone this brilliant.

Then on day one, you ask them to count the chairs in the conference room. They say 14. You count. There are 17. You ask again. They say 15. What?

That’s jagged intelligence. Being weirdly superhuman at hard stuff while failing spectacularly at easy stuff.

That’s AI right now.

Why Does This Happen?

Here’s the thing most people miss. AI doesn’t actually understand anything. It’s not thinking. It’s pattern-matching at an insane scale.

LLMs have consumed a huge slice of the internet. Billions of documents. When you ask them something, they’re essentially asking themselves: “Based on everything I’ve seen, what typically comes next?”

When the pattern is common? They nail it. Complex code, creative writing, summarizing research: all heavily represented in training data. Lots of examples to learn from.

But counting letters in “strawberry”? Nobody writes essays about that. There’s no rich pattern to exploit. It doesn’t help that models read text in chunks called tokens rather than letter by letter. So the model just... guesses. Badly.
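For contrast, here’s how trivial the same task is for ordinary code, which works on individual characters. This is just a minimal sketch; the helper name `count_letter` is made up for illustration.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


print(count_letter("strawberry", "r"))  # 3
```

No patterns, no guessing. The program looks at each character and counts.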

The Examples That Make It Click

Let’s get concrete.

Is 9.11 greater than 9.9? Humans instantly know 9.9 is bigger. But AI sees these as strings of characters, not numbers with meaning. “9.11” has more digits after the decimal. Pattern-wise, that might signal “bigger.” Wrong conclusion, but you can see how it got there.
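To see how the same two strings can point in opposite directions, here’s a small sketch that compares them two ways: once as decimal numbers, and once part by part, the way version numbers or section headings are ordered. The function names are hypothetical, and this only illustrates the ambiguity in the text. It is not a claim about what actually happens inside any particular model.

```python
def greater_as_numbers(a: str, b: str) -> bool:
    """True if a > b when both strings are parsed as decimal numbers."""
    return float(a) > float(b)


def greater_as_dotted_parts(a: str, b: str) -> bool:
    """True if a > b when each dot-separated part is read as its own integer,
    as in version numbers (release 9.11 comes after release 9.9)."""
    parts_a = tuple(int(p) for p in a.split("."))
    parts_b = tuple(int(p) for p in b.split("."))
    return parts_a > parts_b


print(greater_as_numbers("9.11", "9.9"))       # False
print(greater_as_dotted_parts("9.11", "9.9"))  # True
```

Both readings are “right” in some context. A system that only sees the characters has to guess which context applies.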

The tic-tac-toe mess. You’d think AI would crush a simple game, right? Sometimes it makes illegal moves. Puts an X where there’s already an O. Why? Because it’s not actually playing the game. It’s generating text that looks like a tic-tac-toe move. Subtle but crucial difference.

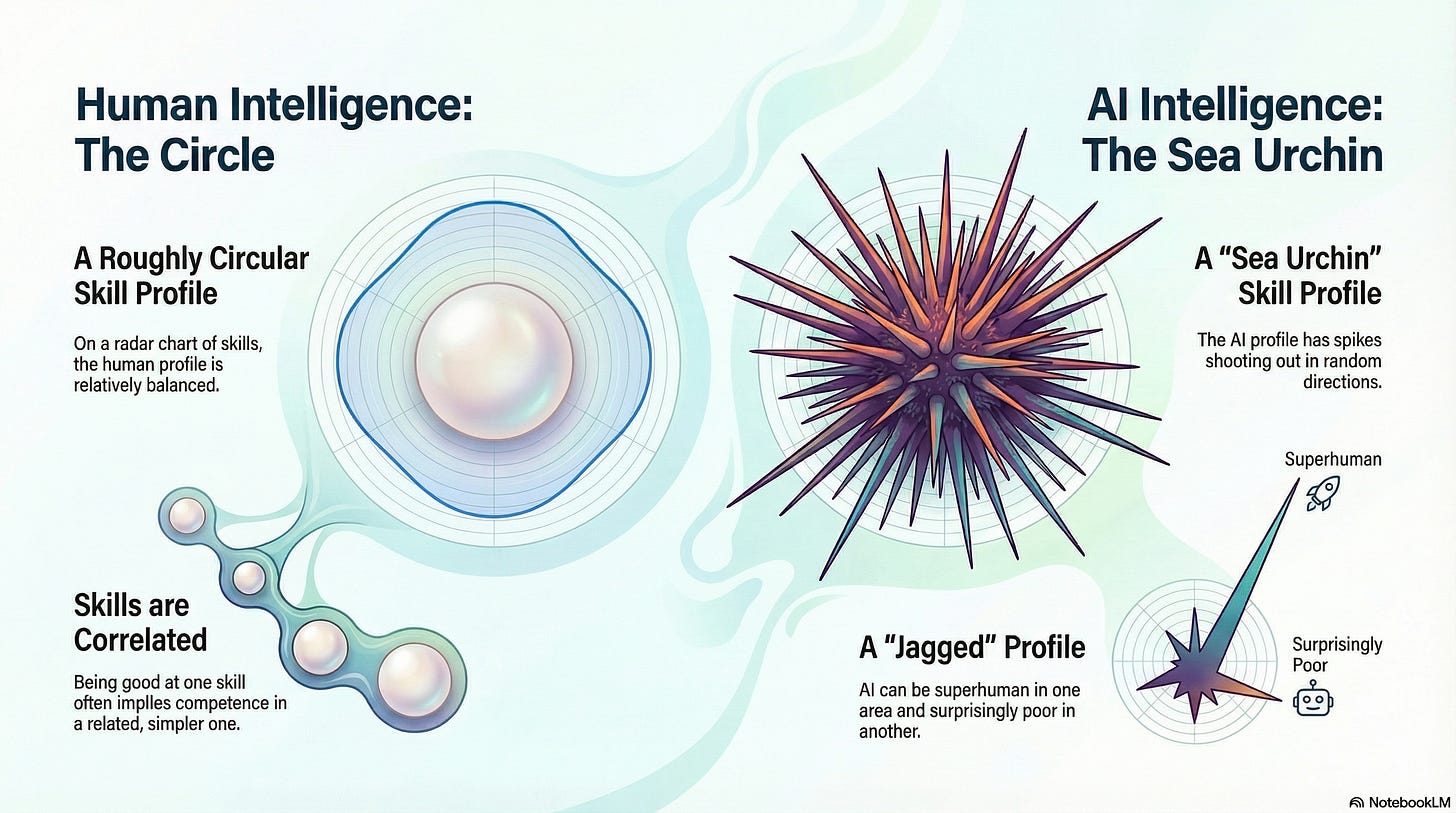
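The gap between “playing the game” and “generating text that looks like a move” is easy to see in code. A program that really tracks the board can reject an illegal move with a two-line check. Here’s a minimal sketch, assuming a board stored as a list of nine cells; the names `Board`, `is_legal_move`, and `apply_move` are illustrative, not from any library.

```python
from typing import List, Optional

Board = List[Optional[str]]  # 9 cells, each "X", "O", or None (empty)


def is_legal_move(board: Board, cell: int) -> bool:
    """A move is legal only if the cell index is on the board and the cell is empty."""
    return 0 <= cell < 9 and board[cell] is None


def apply_move(board: Board, cell: int, player: str) -> Board:
    """Return a new board with the move applied, or raise if the move is illegal."""
    if player not in ("X", "O"):
        raise ValueError(f"unknown player: {player!r}")
    if not is_legal_move(board, cell):
        raise ValueError(f"illegal move: cell {cell} is occupied or off the board")
    new_board = list(board)
    new_board[cell] = player
    return new_board


board: Board = [None] * 9
board = apply_move(board, 4, "O")  # O takes the centre
print(is_legal_move(board, 4))     # False: the centre already holds an O
print(is_legal_move(board, 0))     # True: the corner is still empty
```

A text generator has no such board state unless something outside the model keeps one for it.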

The Radar Chart Mental Model

Karpathy gave us a perfect visual for this.

Imagine a radar chart where each spoke represents a different skill. For humans, the shape is roughly circular. Maybe lumpy in places, but correlated. Good at advanced math? Probably fine at basic arithmetic too.

For AI? The chart looks like a sea urchin. Spikes shooting out in random directions. Superhuman here, embarrassingly bad there. No correlation between adjacent skills.

That’s the “jagged” part. The intelligence profile isn’t smooth. It’s spiky and unpredictable.

Why Should Anyone Care?

Here’s where it gets real.

If you’re using AI to write marketing copy, jagged intelligence is fine. Worst case, you get a weird sentence and fix it.

But what about medical diagnosis? Legal analysis? Self-driving cars? Loan approvals?

In those contexts, you need consistent reliability. Not “brilliant 97% of the time, catastrophically wrong 3%.” That 3% might be the moment someone’s life depends on it.

For businesses deploying AI, this creates a trust gap. You can’t just plug it in and walk away. You need guardrails. Validation layers. Human oversight. The spikiness means you’re never quite sure when it’ll fail.
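What might a validation layer look like? Here’s a rough sketch, under the assumption that you can write a cheap, deterministic check for the answer. Everything here is hypothetical: `guarded_answer` is a made-up helper, and `fake_model` is a stand-in for whatever model call you actually use.

```python
from typing import Callable, Optional


def guarded_answer(
    ask_model: Callable[[str], str],
    is_valid: Callable[[str], bool],
    prompt: str,
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first model answer that passes validation.

    Returns None if every attempt fails, so the caller can escalate to a human.
    """
    for _ in range(max_attempts):
        answer = ask_model(prompt)
        if is_valid(answer):
            return answer
    return None


# Stand-in for a real model call: replies with canned answers in order.
replies = iter(["about fourteen", "17"])


def fake_model(prompt: str) -> str:
    return next(replies)


result = guarded_answer(fake_model, str.isdigit, "How many chairs are in the room?")
print(result)  # 17
```

Note what this does and doesn’t buy you. The check here only confirms the answer is a well-formed number. It can’t tell you whether 17 is the right number. Catching that takes an independent source of truth or a person.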

So What’s Being Done About It?

Good news and bad news.

Good news: People are actively working on this. We’re seeing hybrid systems that combine learning with hard-coded rules. Models that can verify their own outputs. Training techniques that build better “self-knowledge” so the AI knows what it doesn’t know.

Sundar Pichai calls this the “AJI phase”: Artificial Jagged Intelligence. A transitional period before we get to something smoother and more reliable.

Bad news: It’s not clear that just scaling up fixes this. Bigger models can still make the same kinds of weird mistakes. The fundamental issue is that pattern-matching isn’t reasoning. Until we crack that, the jags stay.